Data Preprocessing requires various steps:

https://www.superdatascience.com/machine-learning/

Code: Data Preprocessing

# Importing the libraries

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

# numpy: Library for the Python programming language, adding support for large, Multi-dimensional Arrays and Matrices, along with a large collection of high-level mathematical functions to operate on these arrays.

# matplotlib: Plotting library for the Python programming language and its numerical mathematics extension NumPy. It provides an object-oriented API for embedding plots into applications using general-purpose GUI toolkits like Tkinter, wxPython, Qt, or GTK+.

#pandas: Is a software library written for the Python programming language for Data Manipulation and Analysis. In particular, it offers data structures and operations for manipulating numerical tables and time series.

# Importing the dataset

dataset = pd.read_csv('Data.csv')

X = dataset.iloc[:, :-1].values

y = dataset.iloc[:, 3].values

# Taking care of missing data

from sklearn.preprocessing import Imputer

imputer = Imputer(missing_values = 'NaN', strategy = 'mean', axis = 0)

imputer = imputer.fit(X[:, 1:3])

X[:, 1:3] = imputer.transform(X[:, 1:3])

# Encoding categorical data

# Encoding the Independent Variable

from sklearn.preprocessing import LabelEncoder, OneHotEncoder

labelencoder_X = LabelEncoder()

X[:, 0] = labelencoder_X.fit_transform(X[:, 0])

# The above code of line converts X into 0,1 & 2

# However this may create an interpretation that Spain is greater than France and Germany > Spain

# Thus to prevent this,lets have three seperate columns for France, Spain & Germany

onehotencoder = OneHotEncoder(categorical_features = [0])

X = onehotencoder.fit_transform(X).toarray()

# Encoding the Dependent Variable

labelencoder_y = LabelEncoder()

y = labelencoder_y.fit_transform(y)

# Splitting the dataset into the Training set and Test set

from sklearn.cross_validation import train_test_split

X_train, X_test, y_train, y_test = train_test_split (X, y, test_size = 0.2, random_state = 0)

# Feature Scaling

# Why Feature Scaling

# Ans: Most of ML models are dependent on Euclidean Distance between two points (P1,P2)

# However here Salary will dominate Age, so Eucliean Distance will not be ideal

# Thus to bring both variables on the same scale, preferred is 'Feature Scaling'

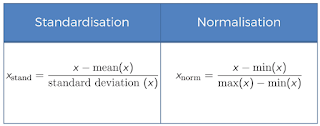

# Feature Scaling are of Two Types: Stanaarization & Normalisation

from sklearn.preprocessing import StandardScaler

sc_X = StandardScaler()

X_train = sc_X.fit_transform(X_train)

X_test = sc_X.transform(X_test)

Concepts:

Euclidean Distance:

Feature Scaling Types:

Hope this helps!

Arun Manglick

- Importing Libraries

- Importing Dataset

- Missing Data

- Categorical Data

- Splitting Dataset - Training/Test

- Feature Scaling

https://www.superdatascience.com/machine-learning/

Code: Data Preprocessing

# Importing the libraries

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

# numpy: Library for the Python programming language, adding support for large, Multi-dimensional Arrays and Matrices, along with a large collection of high-level mathematical functions to operate on these arrays.

# matplotlib: Plotting library for the Python programming language and its numerical mathematics extension NumPy. It provides an object-oriented API for embedding plots into applications using general-purpose GUI toolkits like Tkinter, wxPython, Qt, or GTK+.

#pandas: Is a software library written for the Python programming language for Data Manipulation and Analysis. In particular, it offers data structures and operations for manipulating numerical tables and time series.

# Importing the dataset

dataset = pd.read_csv('Data.csv')

X = dataset.iloc[:, :-1].values

y = dataset.iloc[:, 3].values

# Taking care of missing data

from sklearn.preprocessing import Imputer

imputer = Imputer(missing_values = 'NaN', strategy = 'mean', axis = 0)

imputer = imputer.fit(X[:, 1:3])

X[:, 1:3] = imputer.transform(X[:, 1:3])

# Encoding categorical data

# Encoding the Independent Variable

from sklearn.preprocessing import LabelEncoder, OneHotEncoder

labelencoder_X = LabelEncoder()

X[:, 0] = labelencoder_X.fit_transform(X[:, 0])

# The above code of line converts X into 0,1 & 2

# However this may create an interpretation that Spain is greater than France and Germany > Spain

# Thus to prevent this,lets have three seperate columns for France, Spain & Germany

onehotencoder = OneHotEncoder(categorical_features = [0])

X = onehotencoder.fit_transform(X).toarray()

# Encoding the Dependent Variable

labelencoder_y = LabelEncoder()

y = labelencoder_y.fit_transform(y)

# Splitting the dataset into the Training set and Test set

from sklearn.cross_validation import train_test_split

X_train, X_test, y_train, y_test = train_test_split (X, y, test_size = 0.2, random_state = 0)

# Feature Scaling

# Why Feature Scaling

# Ans: Most of ML models are dependent on Euclidean Distance between two points (P1,P2)

# However here Salary will dominate Age, so Eucliean Distance will not be ideal

# Thus to bring both variables on the same scale, preferred is 'Feature Scaling'

# Feature Scaling are of Two Types: Stanaarization & Normalisation

from sklearn.preprocessing import StandardScaler

sc_X = StandardScaler()

X_train = sc_X.fit_transform(X_train)

X_test = sc_X.transform(X_test)

Concepts:

Euclidean Distance:

Feature Scaling Types:

Hope this helps!

Arun Manglick

No comments:

Post a Comment